The U.S. federal government faces a growing imperative to modernize systems and services using AI. Federal agencies are actively seeking pathways to integrate AI while preserving oversight, mission alignment, and public trust.

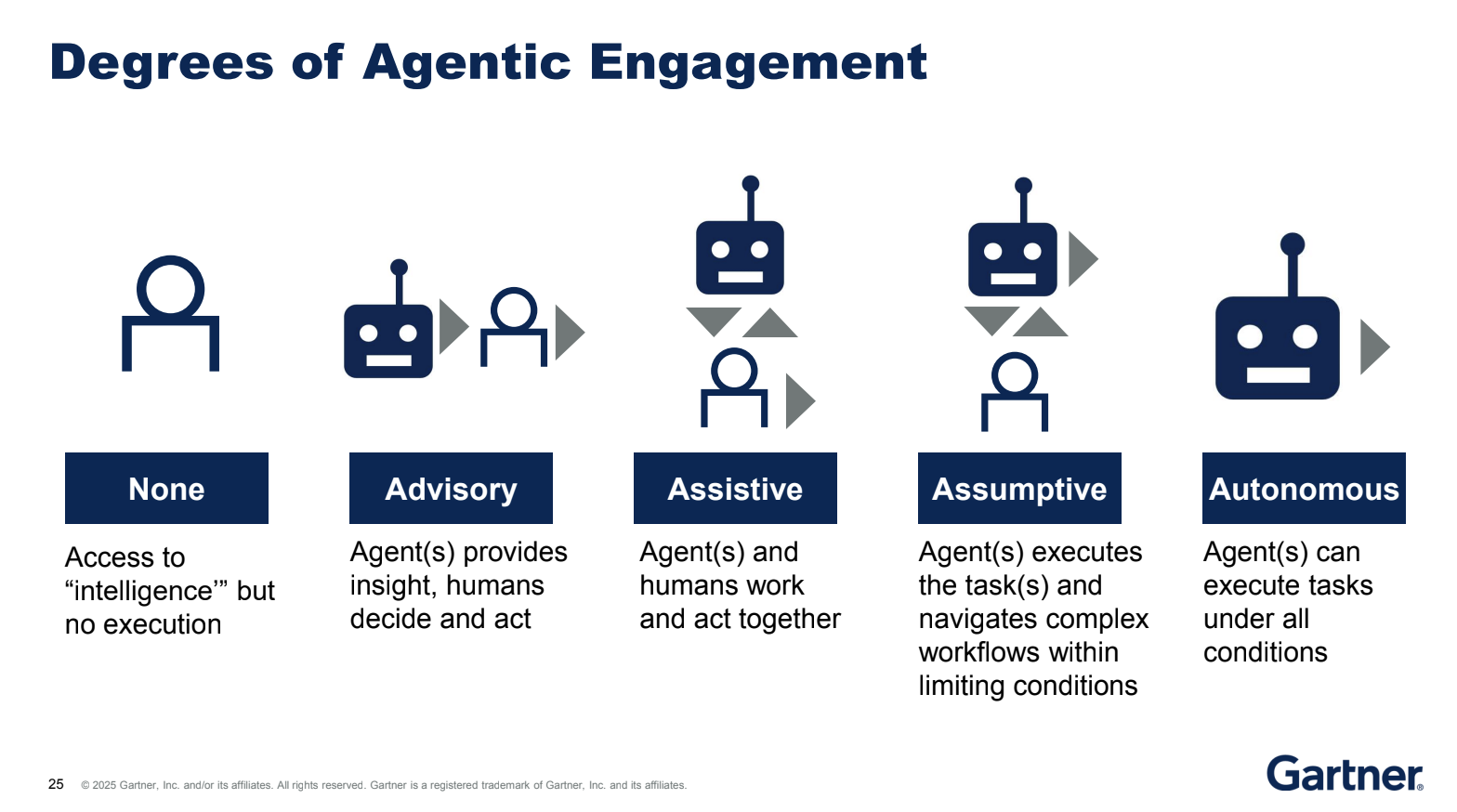

Agentic AI, which refers to AI systems capable of initiating and executing tasks on behalf of users, offers immense potential across public sector operations. However, adoption should not be perceived as a binary shift from human to machine. Instead, agency leaders should understand agentic capability as a continuum. Let’s explore the five degrees of agentic engagement, grounded in Gartner’s model, and a framework for incremental and ethical adoption.

The Five Degrees of Agentic Engagement: A Practical Spectrum for Federal Adoption

Agentic AI is not a one-size-fits-all solution. According to Gartner’s “Degrees of Agentic Engagement” model above, AI systems can operate at varying levels of autonomy. These range from passive tools that generate insights to fully autonomous systems capable of independently executing complex workflows. Understanding this spectrum is critical for government agencies designing AI strategies that align with oversight, transparency, and operational constraints.

- None – Insight Without Execution

At this level, AI tools provide access to information without taking any action. Common federal examples include dashboards, document repositories, and static data visualizations.

Example: FOIA dashboards that present document search results, requiring human agents to interpret and act on findings.

- Advisory – AI Offers Recommendations, Humans Act

Advisory systems deliver data-driven insights or recommendations. However, humans remain fully responsible for decisions and task execution.

Example: AI-generated legal briefings or risk assessments for policymakers, who retain full authority over decisions.

- Assistive – Human-in-the-Loop Collaboration

AI agents and humans jointly perform tasks. The AI assists by automating subtasks, while final control remains with humans. This level aligns well with federal compliance, accountability, and risk management requirements.

Example: AI tools that pre-screen benefits applications or suggest contract matches for procurement officers.

- Assumptive – AI Executes Within Defined Limits

At this stage, agentic systems execute entire tasks within predefined rules and workflows, minimizing human involvement while maintaining operational boundaries.

Example: AI agents that adjudicate routine visa applications using a pre-validated ruleset.

- Autonomous – AI Acts Independently Across Conditions

Fully autonomous systems function without human input, making dynamic decisions in real time. These systems are powerful but raise significant concerns regarding accountability, transparency, and safety.

Example: AI-driven logistics agents coordinating military supply chains under dynamic threat conditions.

Understanding these degrees of agency allows leaders to strategically plan their AI adoption based on organizational maturity, policy constraints, and mission-critical needs. Agencies are not expected to leap from advisory to autonomous overnight. Rather, adopting assistive or advisory-level agents can drive significant gains in productivity, consistency, and decision support while remaining aligned with existing governance frameworks like the DHS AI Playbook.
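One way to make the spectrum concrete is to treat the five degrees as an ordered scale that deployment policy can be checked against. The sketch below is illustrative only: the names `AgenticDegree`, `human_makes_final_decision`, and `within_policy` are hypothetical and do not come from Gartner's model, and the idea of an agency-set "ceiling" is an assumption made for the example.

```python
from enum import IntEnum


class AgenticDegree(IntEnum):
    """The five degrees described above, ordered from least to most autonomous."""
    NONE = 0        # insight without execution
    ADVISORY = 1    # AI recommends, humans act
    ASSISTIVE = 2   # AI automates subtasks, humans keep final control
    ASSUMPTIVE = 3  # AI executes whole tasks within predefined rules
    AUTONOMOUS = 4  # AI acts independently across conditions


def human_makes_final_decision(degree: AgenticDegree) -> bool:
    """True for the levels where, per the descriptions above, humans retain final control."""
    return degree <= AgenticDegree.ASSISTIVE


def within_policy(requested: AgenticDegree, ceiling: AgenticDegree) -> bool:
    """Check a proposed deployment against an agency-set autonomy ceiling (hypothetical policy)."""
    return requested <= ceiling


# Example: an agency that caps deployments at the assistive level.
ceiling = AgenticDegree.ASSISTIVE
print(within_policy(AgenticDegree.ADVISORY, ceiling))    # allowed
print(within_policy(AgenticDegree.ASSUMPTIVE, ceiling))  # exceeds the ceiling
```

Encoding the levels as an ordered type keeps the "continuum" framing explicit: moving up the scale becomes a deliberate policy change rather than a side effect of a new tool.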

Why “Assistive” is the Sweet Spot for Federal Missions

The assistive level represents a pragmatic entry point for agencies pursuing AI adoption. At this stage, AI tools help manage complexity and scale without ceding authority or violating governance protocols. For many federal agencies, this level offers an ideal balance: automation of rote tasks without compromising human oversight.

The benefits of this approach are both operational and ethical. From an operational standpoint, assistive AI helps federal employees process large volumes of information more efficiently and with greater accuracy. For example, in environmental regulation, AI can analyze geospatial data to identify at-risk areas, helping experts prioritize inspections. In veterans’ services, large language models can assist in drafting benefit explanations tailored to individual cases.

Equally important, assistive systems reinforce accountability by maintaining human control. Since AI acts in a supporting role, its outputs can be reviewed, corrected, or overridden. This aligns well with the NIST AI Risk Management Framework, which promotes transparency, explainability, and a human-centered design philosophy. For mission areas involving public safety, social welfare, or legal interpretation, these safeguards are not just desirable—they are necessary.

Assistive AI offers a practical, impactful, and ethically sound path forward for federal missions. It reduces workload and enhances service delivery, while empowering humans to focus on tasks that require nuance, empathy, or discretion.

Human-in-the-Loop (HITL) Design in Advisory and Assistive Systems

HITL design is central to ensuring that AI applications operate responsibly and align with the public interest in high-stakes environments like federal governance. At the advisory and assistive levels, HITL provides a framework in which human oversight is maintained over AI-supported actions, ensuring interpretability, accountability, and value alignment.

For instance, consider an IRS application where machine learning systems are used to identify potential tax discrepancies. These agents might flag returns with abnormal deductions, but trained IRS personnel still make the final determination. This preserves fairness while enabling efficiency. Similarly, in immigration case management, AI may extract and summarize complex case histories, but immigration officers use this data as one input among many in their decision-making process.

The benefit of HITL in such settings is that it mitigates risks associated with biased training data or unforeseen edge cases—unusual scenarios not well represented in the system’s training data. By keeping a human in the loop, the system can generate insights and suggestions while still allowing human values and judgment to drive the outcome. Moreover, HITL supports compliance with the principles outlined in the DHS AI Playbook and aligns with government-wide initiatives such as those tracked at whitehouse.gov.
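The tax-discrepancy example above can be sketched as a two-step flow in which the model may only raise a flag and a named human reviewer produces the outcome. This is a minimal illustration, not a description of any real IRS system: the threshold rule stands in for a trained model, and `Flag`, `Determination`, `flag_return`, and `review` are hypothetical names invented for the sketch.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Flag:
    return_id: str
    reason: str
    deduction_ratio: float  # the value that triggered the flag


@dataclass
class Determination:
    return_id: str
    decided_by: str   # always a human reviewer in this design
    outcome: str
    ai_flag: Flag     # kept alongside the decision for later audit


def flag_return(return_id: str, deduction_ratio: float,
                threshold: float = 0.5) -> Optional[Flag]:
    """Stand-in for a model: flag returns whose deductions look abnormal.

    The fixed threshold is an assumption; a real system would use a trained model.
    """
    if deduction_ratio > threshold:
        return Flag(return_id, "deductions unusually high relative to income",
                    deduction_ratio)
    return None


def review(flag: Flag, reviewer: str,
           decide: Callable[[Flag], str]) -> Determination:
    """The reviewer's decision callback, not the model, sets the outcome."""
    return Determination(flag.return_id, reviewer, decide(flag), flag)


flag = flag_return("R-001", deduction_ratio=0.8)
if flag is not None:
    result = review(flag, reviewer="examiner_17",
                    decide=lambda f: "cleared after document check")
    print(result.decided_by, "->", result.outcome)
```

Because the model's output type (`Flag`) is distinct from the decision type (`Determination`), there is no code path by which the AI alone can issue a final determination, and the retained flag gives auditors a record of what the reviewer saw.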

Readiness Indicators for Progressing Up the Agentic Ladder

As federal agencies consider adopting more capable agentic systems, assessing organizational readiness becomes essential. Four key dimensions serve as readiness indicators:

- Data Infrastructure Maturity

Is your agency able to feed secure, high-quality data to AI agents in real time? Advanced agentic systems rely on real-time, high-quality, and well-governed datasets. Without standardized and reliable data inputs, agents will either produce flawed outputs or remain underutilized. Agencies must evaluate the completeness, security, and accessibility of their data repositories.

- Governance and Compliance Readiness

Are there frameworks for auditing models, logging agent behavior, and enforcing usage limits? Before deploying agents with greater autonomy, agencies need frameworks for validating outputs, logging decisions, and detecting drift in model performance. Governance tools such as audit trails, version control for models, and automated red-teaming simulations can be used to assure compliance with federal mandates and the NIST AI RMF.

- Workforce Capability

Do personnel have the skills to supervise and collaborate with AI systems? This goes beyond technical skills; it includes training in ethical decision-making, human-machine teaming, and procedural oversight. Agencies that embed AI fluency into their workforce development will be better positioned to safely scale their agentic capabilities.

- Operational Risk Profile

Can your mission tolerate automation in high-volume or sensitive decision-making areas? For tasks involving financial distribution, security, or legal consequences, agencies may be more cautious, keeping AI agents confined to advisory or assistive roles. For routine administrative workflows with minimal downstream impact, higher levels of agency might be safely piloted.
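The four indicators above could be turned into a rough self-assessment. The sketch below assumes each dimension is scored from 0 (absent) to 4 (mature) and that the weakest dimension caps the recommended level. Both the scale and the weakest-link rule are assumptions made for illustration, not part of Gartner's model or any federal guidance, and `Readiness` and `recommended_ceiling` are hypothetical names.

```python
from dataclasses import dataclass

# Levels in the order used earlier in this article.
LEVELS = ["none", "advisory", "assistive", "assumptive", "autonomous"]


@dataclass
class Readiness:
    # Each dimension scored 0 (absent) to 4 (mature); the scale is an assumption.
    data_infrastructure: int
    governance: int
    workforce: int
    risk_tolerance: int


def recommended_ceiling(r: Readiness) -> str:
    """Cap the autonomy level at the weakest readiness dimension."""
    weakest = min(r.data_infrastructure, r.governance,
                  r.workforce, r.risk_tolerance)
    # Clamp so out-of-range scores cannot index outside LEVELS.
    return LEVELS[max(0, min(weakest, len(LEVELS) - 1))]


# Strong data platform, but governance tooling is still maturing.
agency = Readiness(data_infrastructure=4, governance=2,
                   workforce=3, risk_tolerance=3)
print(recommended_ceiling(agency))
```

A weakest-link rule is deliberately conservative: strong data infrastructure does not compensate for missing audit trails or an untrained workforce, which matches the cautious posture recommended for sensitive decision-making areas.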

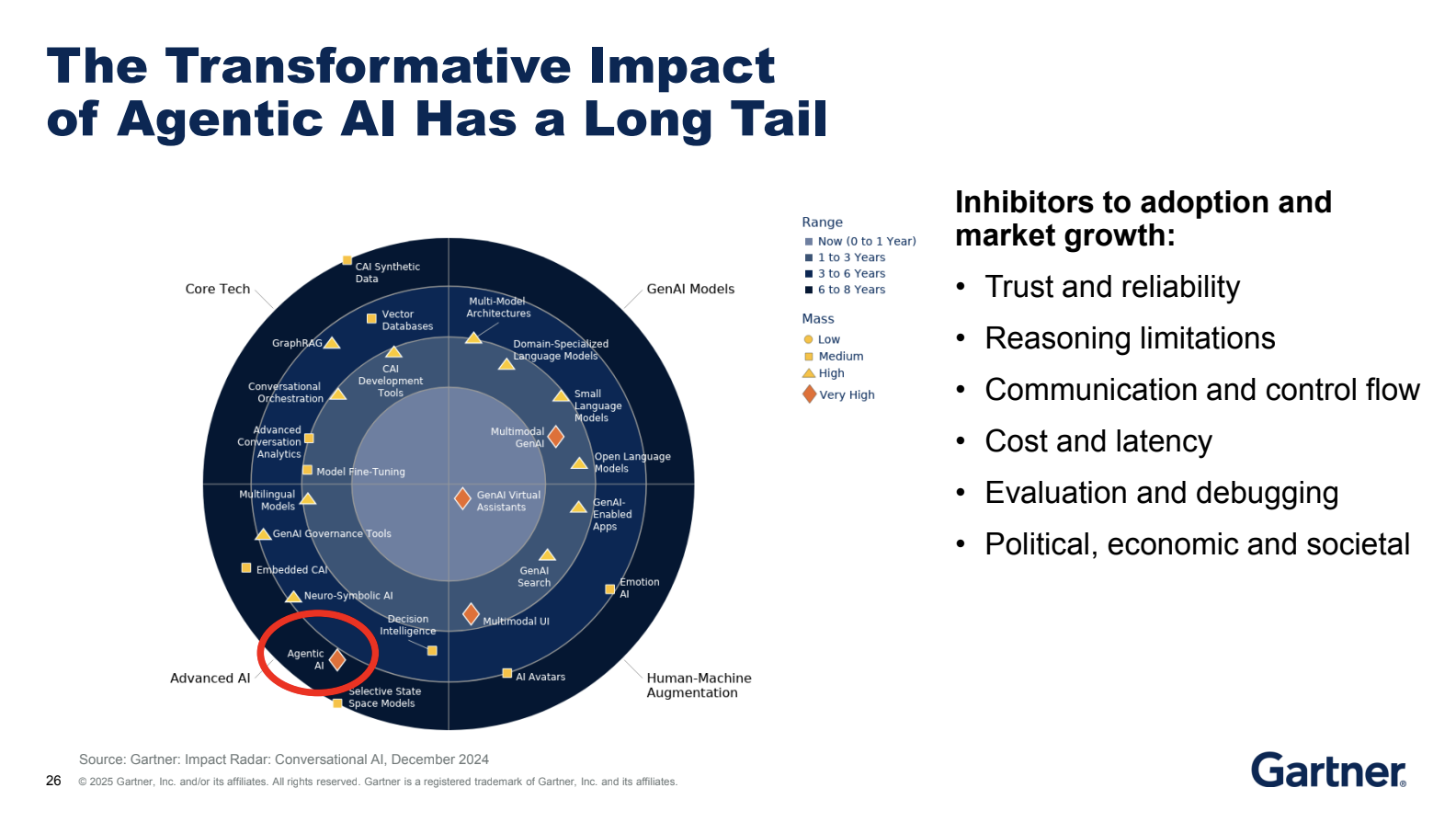

Barriers to Adoption and the Long Tail of Transformative Impact

Despite its promise, agentic AI faces significant barriers to adoption in federal environments. Key inhibitors include:

- Trust and Reliability: Lack of transparency in model outputs undermines confidence.

- Reasoning Limitations: Agents often struggle with nuance and exception handling.

- Communication Flow: Orchestration of agent hand-offs remains immature.

- Cost and Latency: Running large-scale agents can be expensive and compute-intensive.

- Evaluation and Debugging: Agent errors are difficult to trace without robust observability.

- Political and Societal Constraints: Public acceptance and regulatory compliance introduce friction.

According to Gartner’s Impact Radar, agentic AI will have a very high impact within the next three to six years, but realizing this impact will take sustained investment and change management.

For agencies, this underscores the importance of starting with pilot programs, validating small use cases, and building toward greater autonomy over time. Federal programs like the National AI Research Resource Pilot offer valuable platforms to experiment with advanced AI capabilities in a controlled setting.

Conclusion

Agentic AI is not about replacing federal workers or fully automating the government. It is about enabling intelligent systems to assist, augment, and scale public services responsibly. By understanding the five degrees of agentic engagement, federal leaders can adopt AI strategically, build public trust, and deliver better mission outcomes. The goal is not full autonomy today—it’s thoughtful progression with purpose and accountability.

Partner with Techsur Solutions to operationalize Agentic AI tailored to your agency’s mission—bridging strategy, compliance, and innovation for scalable impact.